This project was submitted to HackED 2025 and won 2nd place in the DivE Category (Diversity in Engineering).

SightSense is a device with 2 components:

-

AI-Powered Object Recognition with Speech Integration: We leveraged OpenCV and TensorFlow’s COCO Object Recognition API for real-time object detection and integrated Python speech recognition libraries for voice interaction.

-

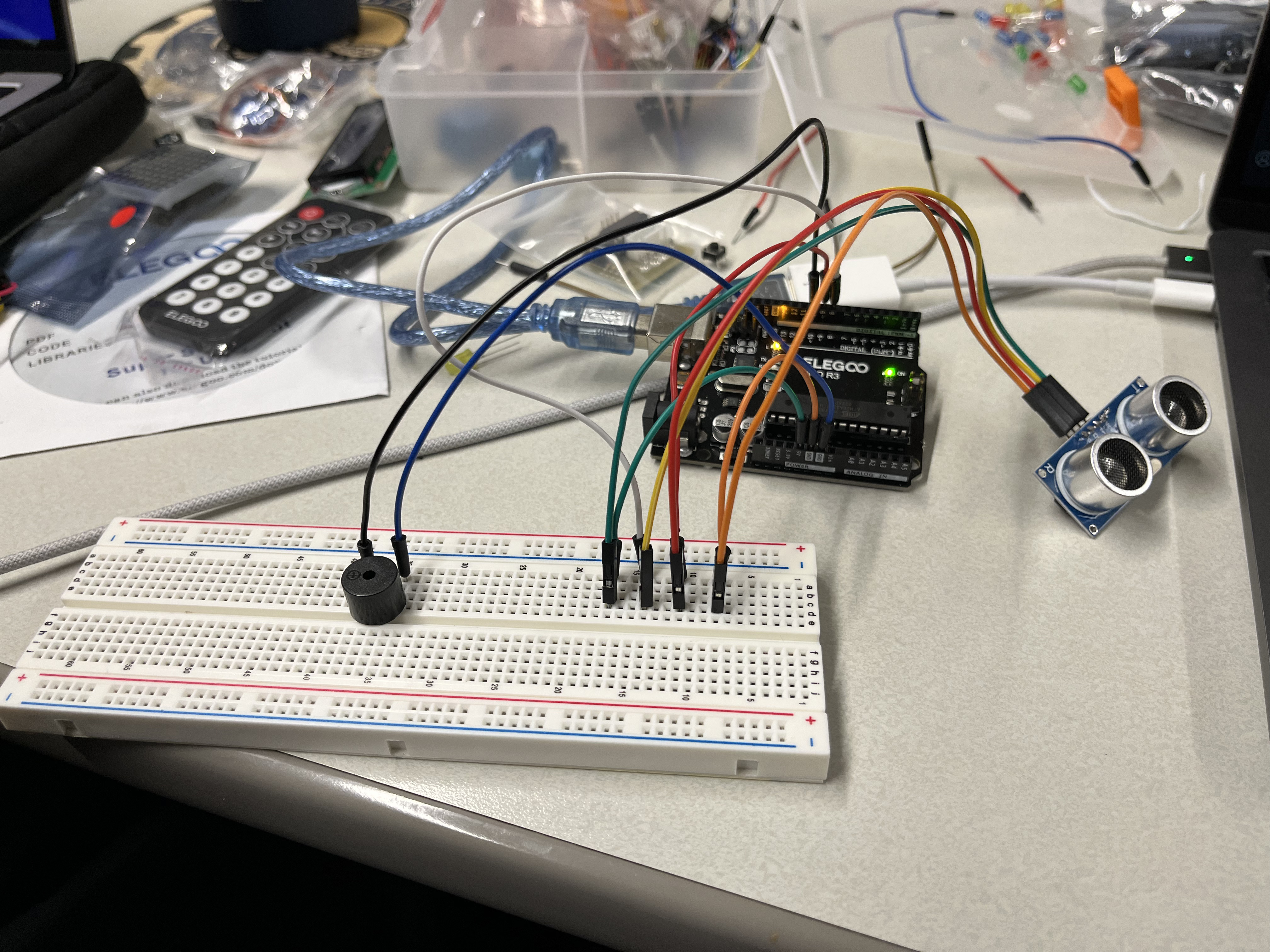

Arduino-Based Crash Detection System Using an ultrasonic sensor, active buzzer, and a 9V battery, we built a portable device that alerts users with sound when they are about to collide with an object.
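The crash detector boils down to a time-of-flight calculation. The post doesn't include the Arduino sketch, so here is a minimal Python sketch of just the arithmetic. It assumes an HC-SR04-style sensor that reports the echo pulse width in microseconds (on an Arduino this is typically read with `pulseIn`), and it uses a hypothetical 50 cm alert threshold. The names `echo_to_distance_cm` and `should_beep` are illustrative, not from the project. Because the pulse travels to the obstacle and back, the round-trip distance is halved.

```python
# Speed of sound in air at roughly 20 °C, in centimetres per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343

# Hypothetical alert threshold; the real device's value is not given in the post.
ALERT_DISTANCE_CM = 50.0


def echo_to_distance_cm(echo_duration_us: float) -> float:
    """Convert an ultrasonic echo pulse width to a one-way distance in cm.

    The pulse goes out to the obstacle and back, so halve the round trip.
    """
    return echo_duration_us * SPEED_OF_SOUND_CM_PER_US / 2


def should_beep(echo_duration_us: float, threshold_cm: float = ALERT_DISTANCE_CM) -> bool:
    """Return True when the obstacle is closer than the alert threshold."""
    # Treat a zero-length pulse as "no echo received" (nothing in range).
    if echo_duration_us <= 0:
        return False
    return echo_to_distance_cm(echo_duration_us) < threshold_cm


if __name__ == "__main__":
    for duration in (580.0, 5830.0, 0.0):
        distance = echo_to_distance_cm(duration)
        print(f"{duration:7.1f} us -> {distance:6.1f} cm, beep={should_beep(duration)}")
```

On the device itself, the same check would run in the Arduino `loop()` and drive the buzzer pin whenever it returns true.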

None of us had any Arduino experience (we are all computer science majors), so we had to learn the basics of circuits and electricity while learning to use an Arduino. I vividly remember pulling out my iPad and working through Ohm’s Law to figure out which resistor we needed.
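Sizing a series resistor with Ohm's Law (V = IR) means dividing the voltage the resistor must drop by the current you want through it: R = (V_supply - V_component) / I. The post doesn't say which component the resistor was for, so the numbers below are purely hypothetical: a part that drops 2 V and should draw 20 mA from a 5 V pin, which gives (5 - 2) / 0.020 = 150 Ω. The sketch also rounds up to the next standard E12 resistor value, since that is what you can actually buy; both function names are illustrative.

```python
import math

# E12 preferred resistor values for one decade.
E12 = (1.0, 1.2, 1.5, 1.8, 2.2, 2.7, 3.3, 3.9, 4.7, 5.6, 6.8, 8.2)


def series_resistor_ohms(supply_v: float, component_drop_v: float, current_a: float) -> float:
    """Ohm's Law, R = V / I, applied to the voltage left over for the resistor."""
    if current_a <= 0:
        raise ValueError("current must be positive")
    if supply_v <= component_drop_v:
        raise ValueError("supply voltage must exceed the component's drop")
    return (supply_v - component_drop_v) / current_a


def next_standard_value(ohms: float) -> float:
    """Round up to the nearest E12 value so the current never exceeds the target."""
    if ohms <= 0:
        raise ValueError("resistance must be positive")
    decade = 10 ** math.floor(math.log10(ohms))
    for base in E12:
        candidate = base * decade
        # Small tolerance so floating-point noise doesn't skip an exact match.
        if candidate >= ohms - 1e-9:
            return round(candidate, 6)
    # Past 8.2 in this decade: wrap to the start of the next one.
    return round(10 * decade, 6)


if __name__ == "__main__":
    exact = series_resistor_ohms(5.0, 2.0, 0.020)  # hypothetical values
    print(f"exact: {exact:.1f} ohms, buy: {next_standard_value(exact):.0f} ohms")
```

Rounding up rather than to the nearest value is the conservative choice: a slightly larger resistor only lowers the current a little.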

The computer vision component had its own learning curve, since we had to learn the basics of using a pre-trained model with TensorFlow. The most challenging part was connecting speech recognition to the object recognition model to produce our final product. In fact, we ended up having to use multithreading to do so (shoutout to [CMPUT 379] lectures for saving the day). Who knew paying attention in class could come in handy during a hackathon?
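The post doesn't show how the threads were wired together, so here is a minimal sketch of one common pattern, not the project's actual code. It assumes the vision loop and the speech listener run as separate threads. The vision thread keeps overwriting the latest detections behind a lock, and the speech thread reads a snapshot whenever a voice command arrives. The camera, detector, and microphone are replaced by hypothetical stand-ins (lists of labels and a `queue.Queue` of command strings), so it runs without OpenCV, TensorFlow, or audio hardware.

```python
import queue
import threading
import time


class SharedDetections:
    """Latest object labels from the vision thread, guarded by a lock."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._labels: list[str] = []

    def update(self, labels: list[str]) -> None:
        with self._lock:
            self._labels = list(labels)

    def snapshot(self) -> list[str]:
        # Return a copy so callers can't mutate shared state without the lock.
        with self._lock:
            return list(self._labels)


def vision_loop(frames: list[list[str]], shared: SharedDetections) -> None:
    """Stand-in for the camera + detector loop.

    Each hypothetical 'frame' is already the list of labels a COCO-trained
    detector might return; the real loop would read the camera and run the model.
    """
    for labels in frames:
        shared.update(labels)
        time.sleep(0.01)  # pretend inference takes a moment per frame


def speech_loop(commands: "queue.Queue[str | None]", shared: SharedDetections,
                replies: list[str]) -> None:
    """Stand-in for the speech thread: answers using the latest detections."""
    while True:
        command = commands.get()  # blocks until a (simulated) voice command arrives
        if command is None:  # sentinel value: shut down
            break
        if "what do you see" in command.lower():
            labels = shared.snapshot()
            replies.append("I see " + ", ".join(labels) if labels else "I don't see anything")


def run_demo() -> list[str]:
    shared = SharedDetections()
    commands: queue.Queue = queue.Queue()
    replies: list[str] = []
    frames = [["cup"], ["person"], ["person", "chair"]]  # hypothetical detector output

    vision = threading.Thread(target=vision_loop, args=(frames, shared))
    speech = threading.Thread(target=speech_loop, args=(commands, shared, replies))
    vision.start()
    speech.start()

    vision.join()  # let the simulated camera finish so the answer is deterministic
    commands.put("What do you see?")
    commands.put(None)
    speech.join()
    return replies


if __name__ == "__main__":
    print(run_demo())
```

The lock keeps the speech thread from reading a half-written list, and the blocking `queue.get()` means the listener uses no CPU while it waits for the next command.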

Here is the demo video on [YouTube].

Check out the [website] we built to showcase it!

Check out the [GitHub repo].